Xiyi Chen

I am a second-year PhD student in Computer Science at the University of Maryland, College Park, advised by Prof. Ming Lin. I also work closely with Prof. Ruohan Gao. Before that, I obtained my master's degree in Computer Science at ETH Zürich, where I worked on human avatar synthesis and human mesh recovery, advised by Dr. Sergey Prokudin and Prof. Siyu Tang. I obtained my bachelor's degree in Computer Science at Maryland as well, where I worked with Prof. David Jacobs on surveillance-quality face recognition. My research interests lie at the intersection of computer vision and computer graphics, focusing on human avatar reconstruction and 3D/4D scene reconstruction and generation.

Email / GitHub / Google Scholar / LinkedIn / Gallery

News

Publications

SonoWorld: From One Image to a 3D Audio-Visual Scene

Derong Jin*, Xiyi Chen*, Ming Lin, Ruohan Gao

CVPR, 2026

project page / arXiv

We propose the first framework that generates an explorable 3D scene and semantically grounded spatial ambisonic audio from a single image for free-viewpoint audio-visual rendering.

HART: Human Aligned Reconstruction Transformer

Xiyi Chen, Shaofei Wang, Marko Mihajlovic, Taewon Kang, Sergey Prokudin, Ming Lin

arXiv, 2025

project page / arXiv

We proposed a unified framework for clothed human reconstruction, SMPL-X estimation, and novel view synthesis from sparse-view, uncalibrated human images.

SplatFormer: Point Transformer for Robust 3D Gaussian Splatting

Yutong Chen, Marko Mihajlovic, Xiyi Chen, Yiming Wang, Sergey Prokudin, Siyu Tang

ICLR, 2025 (Spotlight Presentation)

project page / arXiv / code

We analyzed the performance of novel view synthesis methods on challenging out-of-distribution (OOD) camera views and introduced SplatFormer, a data-driven 3D transformer that refines 3D Gaussian Splatting primitives to improve rendering quality in extreme camera scenarios.

Morphable Diffusion: 3D-Consistent Diffusion for Single-image Avatar Creation

Xiyi Chen, Marko Mihajlovic, Shaofei Wang, Sergey Prokudin, Siyu Tang

CVPR, 2024

project page / arXiv / code

We proposed the first diffusion model to enable the creation of fully 3D-consistent, animatable, and photorealistic human avatars from a single image of an unseen subject.

Projects

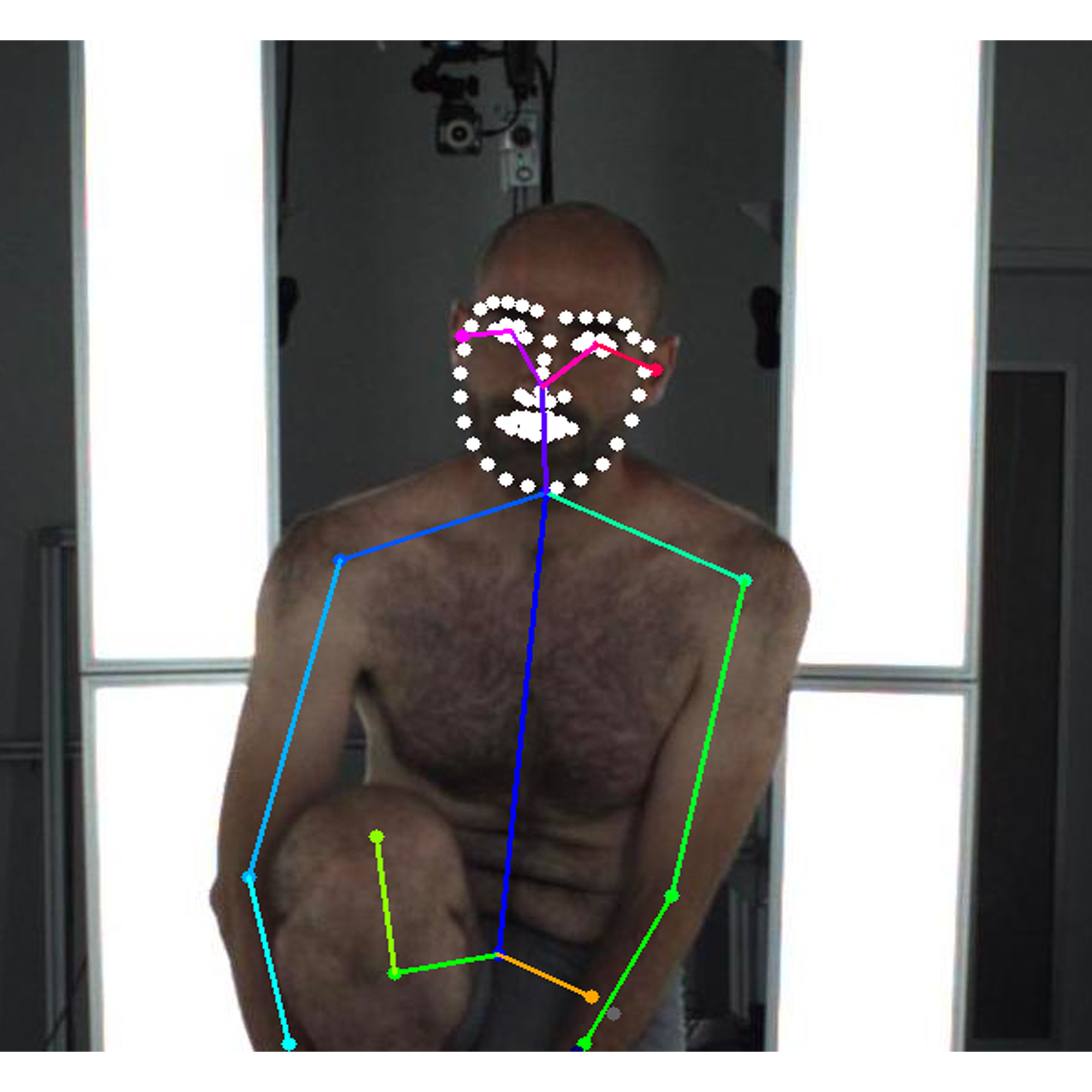

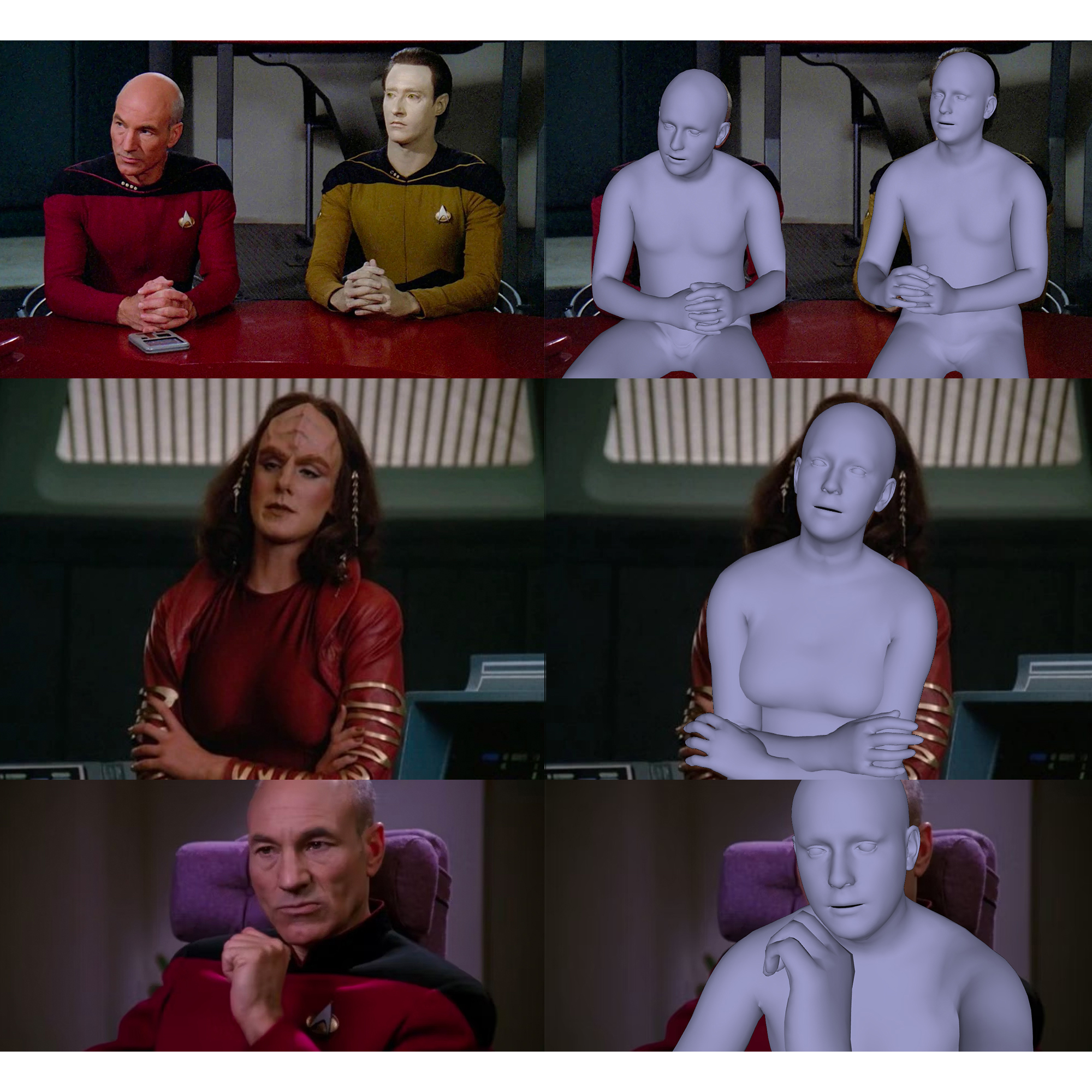

Towards Robust 3D Body Mesh Inference of Partially-observed Humans

Semester Project at Computer Vision and Learning Group

report / slides / code

We improved the SMPLify-X optimization pipeline by applying keypoint blending and a stronger pose prior for robust human mesh inference on partially observed human images.

Leveraging Motion Imitation in Reinforcement Learning for Biped Character

Final project for Digital Humans

report / code

We reproduced the imitation tasks in DeepMimic with a curriculum training strategy to extend our algorithm's applicability to various biped robots with different shapes, masses, and dynamics models.

Learning to Reconstruct 3D Faces by Watching TV

Final project for 3D Vision

report / code

We proposed using the abundant temporal information in TV series videos for 3D face reconstruction by modifying DECA's encoders and adding bidirectional RNN-based temporal feature extractors that propagate and aggregate information across frames.

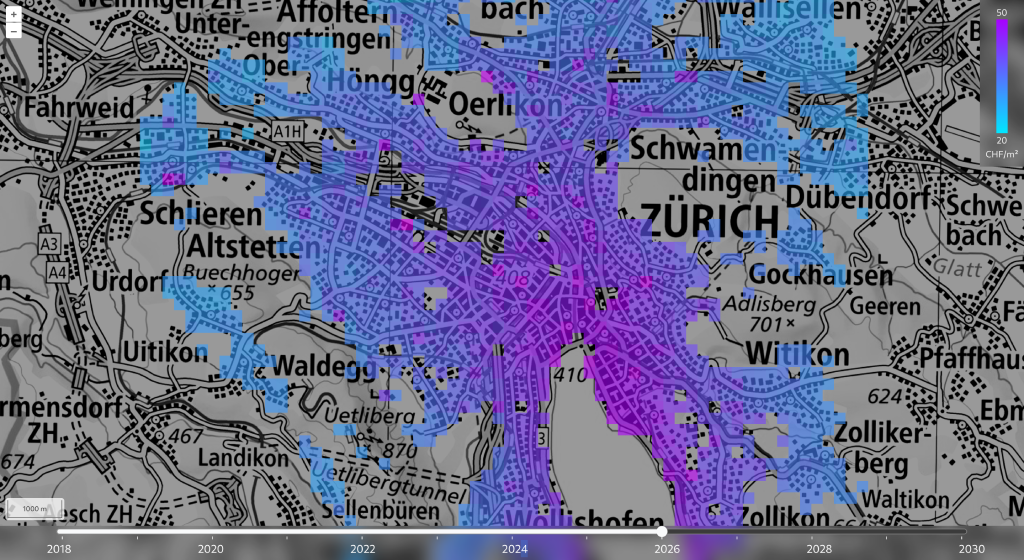

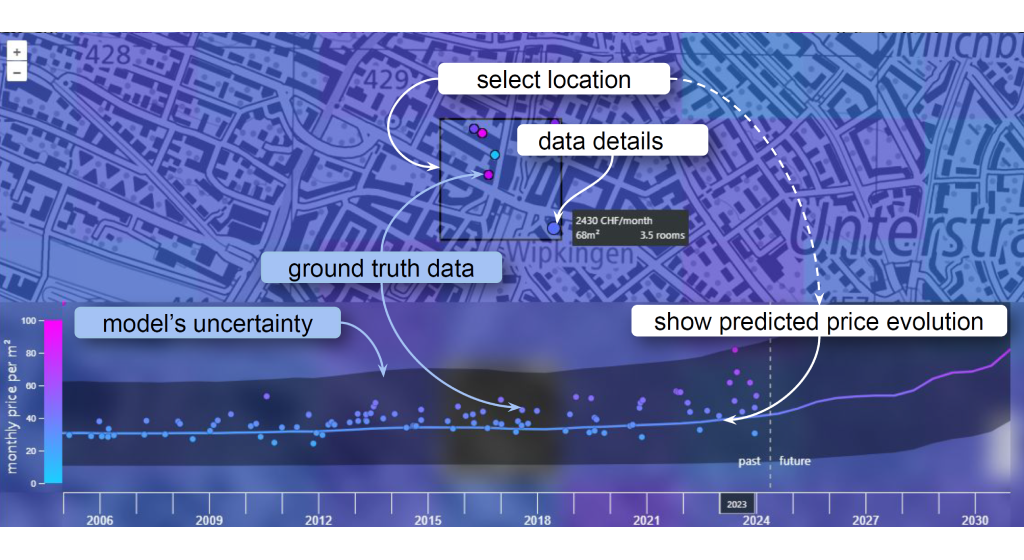

Gentrification Exploration in Zürich

Final Project for Interactive Machine Learning: Visualization & Explainability

demo / report / poster

We designed an interactive machine learning tool based on a Variational Nearest Neighbor Gaussian Process (VNNGP) model to uncover and visualize pricing dynamics and gentrification developments in the housing rental market of Zürich.

Services

Awards

Template credits: Jon Barron